A Vision for AI-Augmented Software Development

Modern software organisations are adopting agentic software development lifecycle (ASDLC) practices. We now orchestrate agents that build the code on our behalf. This document shows how to do this successfully.

Why This Matters: The software development lifecycle is the tightest constraint for most technology organisations: the ability to create software limits business growth. Agents remove this constraint. When code generation becomes effectively unlimited, we unlock novel ways to improve how we work.

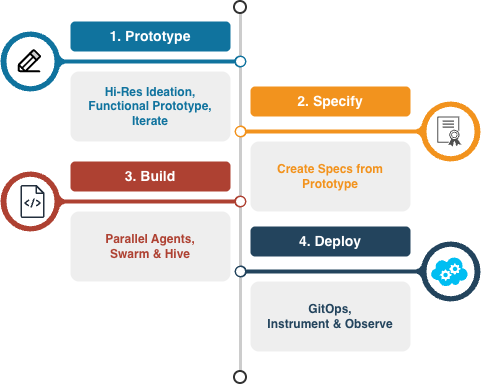

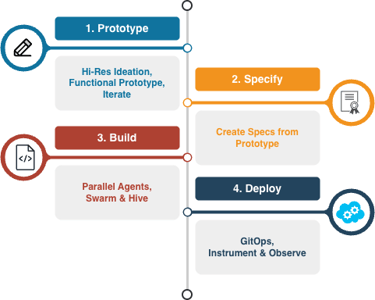

Core Objectives: Everything becomes code, stored in Git, kept fresh and in sync. We couple fast prototyping with rigorous engineering through four phases: Prototype → Specify → Build → Deploy. Agents self-service, work in parallel, and operate within guard rails. Humans focus on creative problems; agents handle repetitive tasks.

Expected Outcomes: Development cycles compress from two-week sprints to one-hour sprints. Humans shift from doing all the work to focusing on thinking, whilst agents handle designing and coding. Trust becomes our key metric, proven through comprehensive testing. Test to trust. Then trust the code.

Why Now? The Case for Change

Where are we? The SDLC is the tightest constraint for most technology organisations. Traditional practices—humans doing all thinking, designing, and coding—create the bottleneck.

The challenge: write code customers love whilst maintaining engineering rigour. Vibe coding delivers speed but lacks repeatability. Engineering delivers quality but moves slowly. The four-phase workflow enables both.

What's changed? Agentic AI has passed a tipping point, and everyone has noticed. Easy tools let us vibe ideas into designs and code: we can chat with an agent and get functioning code back. We are rapidly approaching the point where we can hand an agent a whole task and receive a working implementation.

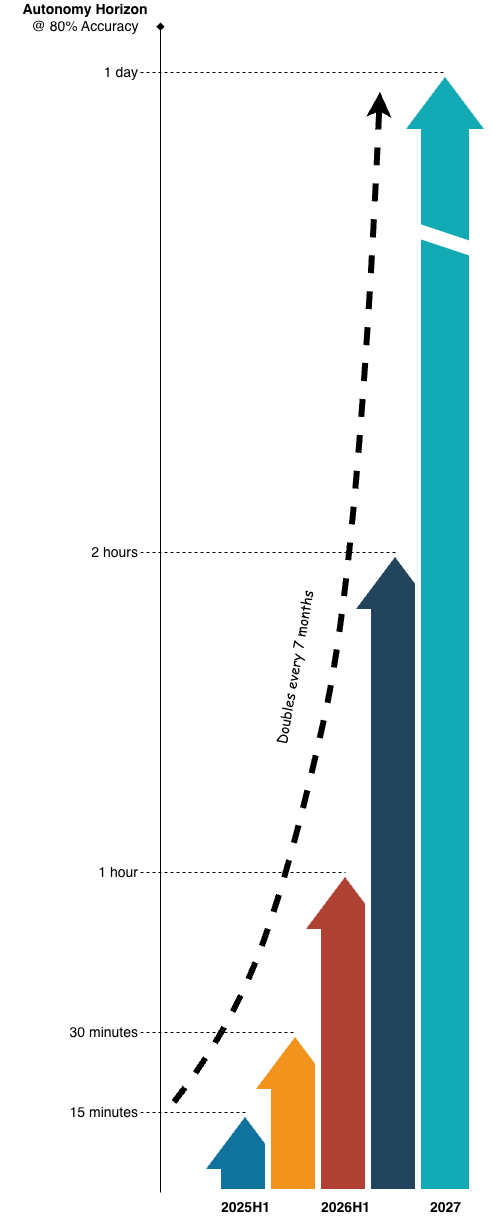

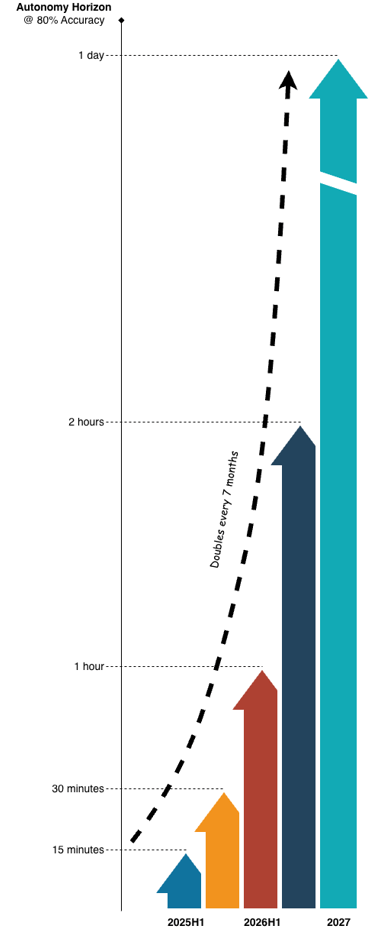

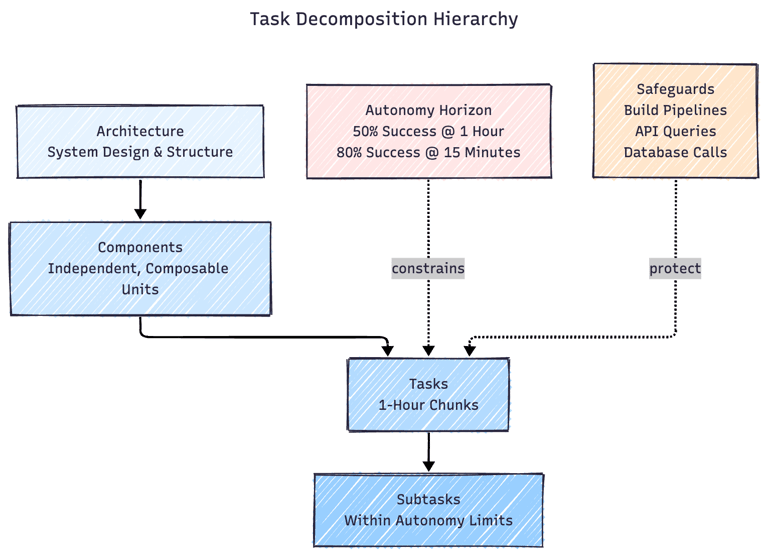

The autonomy horizon is the length of task agents can complete reliably. Current frontier models handle tasks of up to one hour at a 50% success rate, dropping to fifteen minutes at 80% reliability. This horizon has been doubling roughly every seven months. By 2027, agents may handle week-long tasks autonomously. The boundary between what agents can do alone and what needs human oversight moves predictably upward. Human involvement shifts towards specifying work in a form that agents can execute reliably.
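The doubling claim is easy to turn into a back-of-the-envelope projection. A minimal sketch, assuming the figures quoted above (a one-hour horizon at a 50% success rate, doubling every seven months) and assuming "week-long" means a 40-hour working week; `horizon_after` and `months_to_reach` are illustrative helpers. Treat the output as an extrapolation of a trend, not a forecast.

```python
import math

def horizon_after(months: float, start_hours: float = 1.0, doubling_months: float = 7.0) -> float:
    """Task length (hours) completable at the same success rate after `months`, if the trend holds."""
    return start_hours * 2 ** (months / doubling_months)

def months_to_reach(target_hours: float, start_hours: float = 1.0, doubling_months: float = 7.0) -> float:
    """Months until the horizon reaches `target_hours`, assuming steady exponential growth."""
    return doubling_months * math.log2(target_hours / start_hours)

# Assumption: a "week-long" task is a 40-hour working week.
for doubling in (7.0, 4.0):
    months = months_to_reach(40.0, doubling_months=doubling)
    print(f"doubling every {doubling:.0f} months -> 40h horizon in ~{months:.0f} months")
```

Under a seven-month doubling period, reaching a 40-hour horizon takes log2(40) ≈ 5.3 doublings, or roughly 37 months; an illustrative faster four-month doubling brings that down to about 21 months. The projected date is therefore very sensitive to which doubling period holds.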

Why now? Organisations that master agentic development first will gain significant competitive advantage. The business value is clear: remove the SDLC bottleneck, and the entire business accelerates. More features. Faster iterations. Higher quality. Lower costs. Technology becomes a growth enabler rather than a constraint.

The Vision: Four Pillars Underpinning AI-Augmented Development

Good does not look like fewer developers doing the same amount of work in the same time. Good looks like doing the same work faster because agents handle repetitive tasks whilst humans focus on creative problem-solving. Good looks like specifications that drive development, testing that builds trust, and deployments that just work. Good looks like ideas moving from prototype to production in days, not months, and value delivered in weeks, not quarters.

This vision rests on four pillars: a clear workflow, an integrated toolchain, new ways of working, and measurable success criteria.

Pillar 1: The Four-Phase Workflow

The new DevX workflow transforms how we build software through four distinct phases: Prototype → Specify → Build → Deploy. Each phase has a clear purpose and transitions cleanly to the next.

Phase 1: Prototype

— Vibe code high-fidelity ideas. Build functional prototypes using appropriate tools (Windsurf for UIs, Jupyter for models, OpenAPI for services, Terraform for infrastructure). Iterate based on stakeholder feedback until the approach feels right. The prototype perfects design intent; it won't reach production.

Phase 2: Specify

— Transform prototypes into comprehensive specifications. Prototype code will be messy and intertwined—that's expected from rapid iteration. Don't refactor it. Instead, extract clear specs that capture the perfected design: contracts, schemas, behaviours, constraints, performance targets. Version these specs in a registry. They become the source of truth that drives development, orchestrates agents, and validates output.
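To make "version these specs in a registry" concrete, here is a minimal in-memory sketch. The `Spec` fields mirror the list above (contracts, schemas, behaviours, constraints, performance targets); the class names, field names and the `SpecRegistry` API are hypothetical, and a real registry would sit behind Git or an artefact store rather than a Python dict.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Spec:
    """A versioned specification extracted from a prototype (hypothetical shape)."""
    name: str
    version: str                        # semantic version, e.g. "1.2.0"
    contract: str                       # e.g. an API route or function signature
    schema: dict                        # data shapes the implementation must accept/return
    behaviours: tuple[str, ...]         # plain-language behaviours agents must implement
    constraints: tuple[str, ...] = ()   # e.g. security or data-handling rules
    p95_latency_ms: int | None = None   # performance target, if any

class SpecRegistry:
    """Append-only store: a published version is never overwritten."""

    def __init__(self) -> None:
        self._specs: dict[str, dict[str, Spec]] = {}

    def publish(self, spec: Spec) -> None:
        versions = self._specs.setdefault(spec.name, {})
        if spec.version in versions:
            raise ValueError(f"{spec.name}@{spec.version} already published")
        versions[spec.version] = spec

    def get(self, name: str, version: str) -> Spec:
        return self._specs[name][version]

    def latest(self, name: str) -> Spec:
        # Compare versions numerically so "1.10.0" sorts above "1.9.0".
        versions = self._specs[name]
        newest = max(versions, key=lambda v: tuple(int(part) for part in v.split(".")))
        return versions[newest]
```

Making published versions immutable is the design choice that lets a spec act as a source of truth: agents and validators can pin `name@version` and know it will not change underneath them.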

Phase 3: Build

— Agents generate production code from specifications. Run parallel agents (swarm and hive patterns) in the cloud. Updates appear in Slack and Jira. Test everything continuously: code against specs, integration points, model performance, infrastructure compliance. Fix issues in each PR before merging. Everything becomes code: application logic, APIs, data pipelines, ML models, containers, charts, dashboards, schemas, batch jobs, MCP servers.
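One way to read "test code against specs" is to treat examples written into a spec as executable acceptance tests that every agent-generated implementation must pass before its PR merges. A minimal sketch, assuming a spec carries input/output examples; `discount_spec`, `apply_discount` and `check_against_spec` are hypothetical names for illustration.

```python
from typing import Any, Callable

# Hypothetical spec fragment: behaviours expressed as input/output examples.
discount_spec = {
    "name": "apply_discount",
    "examples": [
        {"args": (100.0, 0.1), "expected": 90.0},
        {"args": (50.0, 0.0), "expected": 50.0},
        {"args": (80.0, 1.0), "expected": 0.0},
    ],
}

def apply_discount(price: float, rate: float) -> float:
    """Stand-in for agent-generated code under test."""
    return round(price * (1 - rate), 2)

def check_against_spec(impl: Callable[..., Any], spec: dict) -> list[str]:
    """Return a list of failures; an empty list means the implementation conforms."""
    failures = []
    for example in spec["examples"]:
        try:
            actual = impl(*example["args"])
        except Exception as exc:  # a crash counts as a spec violation
            failures.append(f"{spec['name']}{example['args']} raised {exc!r}")
            continue
        if actual != example["expected"]:
            failures.append(
                f"{spec['name']}{example['args']} = {actual}, expected {example['expected']}"
            )
    return failures

failures = check_against_spec(apply_discount, discount_spec)
print("PR can merge" if not failures else failures)
```

Because the check returns a list of failures rather than a single boolean, the same output can be posted back to the PR (or to Slack and Jira) so the agent knows exactly which behaviours to fix.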

Phase 4: Deploy

— GitOps automation executes deployment. Changes in GitHub trigger build tasks: update Helm charts, rebuild containers, deploy models, refresh dashboards, migrate schemas, call external APIs. Deployment becomes algorithmic, reliable, fast.

Transitions

Each phase produces artefacts that feed the next. Prototypes become specs. Specs drive builds. Builds trigger deployments. The workflow is linear but iterative; feedback loops exist within each phase, not between them.
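In the Deploy phase, "changes in GitHub trigger build tasks" is essentially a routing table from what changed to what must run. A minimal sketch, assuming a hypothetical repository layout (`charts/`, `docker/`, `models/`, `dashboards/`, `migrations/`); in practice this mapping would live in CI configuration or a GitOps controller rather than application code.

```python
from fnmatch import fnmatch

# Hypothetical layout: path pattern -> deployment task it triggers.
ROUTES = [
    ("charts/*", "update-helm-chart"),
    ("docker/*", "rebuild-container"),
    ("models/*", "deploy-model"),
    ("dashboards/*", "refresh-dashboard"),
    ("migrations/*.sql", "migrate-schema"),
]

def tasks_for(changed_paths: list[str]) -> list[str]:
    """Return the distinct deployment tasks triggered by a commit, in route order."""
    triggered = []
    for pattern, task in ROUTES:
        # fnmatch's "*" also matches "/", so "charts/*" covers nested chart files.
        if task not in triggered and any(fnmatch(p, pattern) for p in changed_paths):
            triggered.append(task)
    return triggered

print(tasks_for(["charts/api/values.yaml", "migrations/004_add_index.sql", "README.md"]))
# ['update-helm-chart', 'migrate-schema']
```

Keeping the routes as data makes the deployment behaviour itself reviewable and versioned, in line with "everything becomes code".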

Pillar 2: Agentic Toolchain

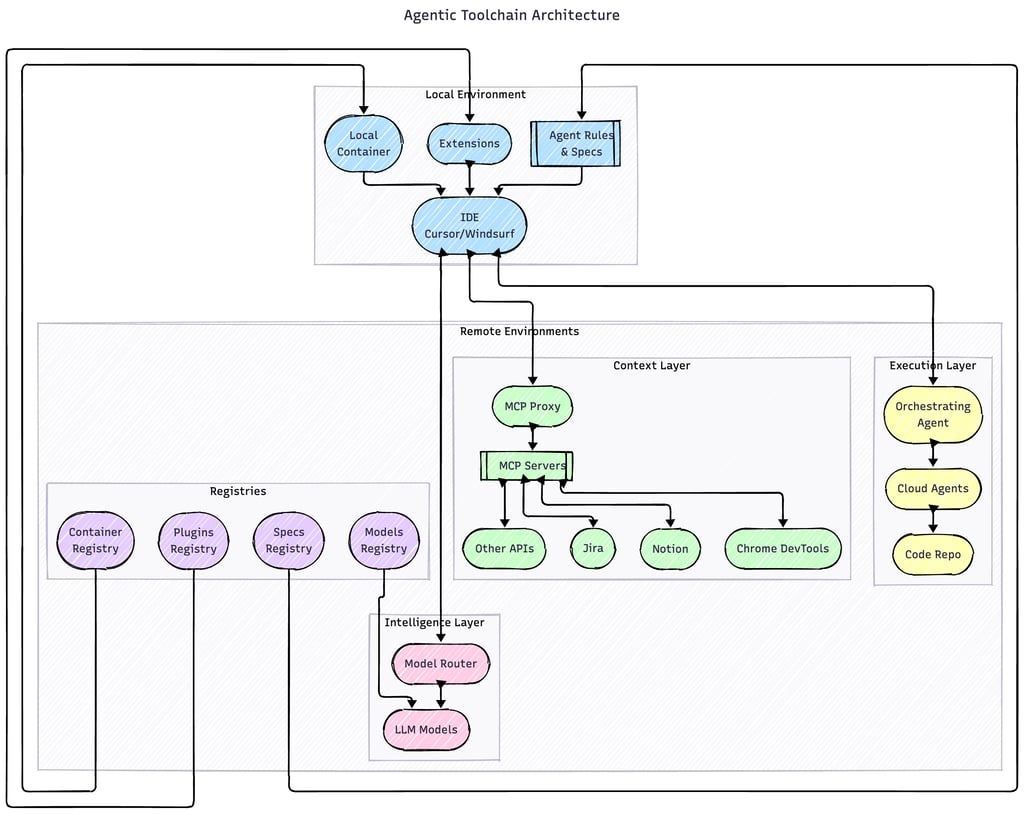

The agentic toolchain connects local development environments with cloud-based agents, models, and registries. This architecture enables developers to work with AI assistance whilst agents handle parallel build tasks in the cloud.

Local Environment

— Developers work in their IDE (Cursor, Windsurf) with extensions that connect to cloud services. Rules and specs guide AI behaviour. Local containers provide isolated development environments. The IDE becomes an orchestration point more than a code editor.

Context Layer

— MCP (Model Context Protocol) servers provide agents with access to organisational context: Jira tickets, Notion documentation, Google Drive files, other APIs. The MCP Proxy acts as a gateway, managing authentication and access scopes. Agents understand the full context of their work, not just the code.
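The proxy's job of managing access scopes reduces to a check before any request is forwarded to a context source. A minimal sketch, assuming each agent has already been authenticated upstream and has been issued a set of scopes, and that each source requires one scope; the scope names, agent IDs and `McpProxy` class are hypothetical and are not part of the MCP specification's own API.

```python
from dataclasses import dataclass

# Hypothetical mapping from context source to the scope an agent needs to read it.
REQUIRED_SCOPE = {
    "jira": "tickets:read",
    "notion": "docs:read",
    "gdrive": "files:read",
}

@dataclass
class McpProxy:
    grants: dict[str, set[str]]  # authenticated agent id -> scopes issued to it

    def authorise(self, agent_id: str, source: str) -> bool:
        """Allow a request only if the agent holds the scope the source requires."""
        required = REQUIRED_SCOPE.get(source)
        if required is None:
            return False  # unknown sources are denied by default
        return required in self.grants.get(agent_id, set())

proxy = McpProxy(grants={"build-agent-7": {"tickets:read", "docs:read"}})
print(proxy.authorise("build-agent-7", "jira"))    # True
print(proxy.authorise("build-agent-7", "gdrive"))  # False: scope not granted
```

Denying unknown sources and unknown agents by default keeps the gateway fail-closed, so adding a new MCP server requires an explicit scope decision.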

Intelligence Layer

— Certified LLMs (Claude, GPT-4, etc.) power agent reasoning. A model registry maintains approved models with known capabilities and costs. Teams can select appropriate models for different tasks: fast models for simple changes, powerful models for complex reasoning.
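Choosing fast models for simple changes and powerful models for complex reasoning can be expressed as picking the cheapest certified model whose capability meets the task. A minimal sketch; the registry entries, capability tiers and costs below are invented placeholders, not real model names or published pricing.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelEntry:
    name: str             # placeholder identifiers, not real model names
    capability: int       # 1 = simple edits ... 3 = complex multi-step reasoning
    cost_per_mtok: float  # illustrative cost units per million tokens
    certified: bool = True

REGISTRY = [
    ModelEntry("fast-model", capability=1, cost_per_mtok=0.5),
    ModelEntry("balanced-model", capability=2, cost_per_mtok=3.0),
    ModelEntry("frontier-model", capability=3, cost_per_mtok=15.0),
    ModelEntry("experimental-model", capability=3, cost_per_mtok=1.0, certified=False),
]

def select_model(required_capability: int) -> ModelEntry:
    """Cheapest certified model that meets the task's capability requirement."""
    candidates = [m for m in REGISTRY if m.certified and m.capability >= required_capability]
    if not candidates:
        raise LookupError(f"no certified model with capability >= {required_capability}")
    return min(candidates, key=lambda m: m.cost_per_mtok)

print(select_model(1).name)  # fast-model
print(select_model(3).name)  # frontier-model (experimental-model is not certified)
```

The `certified` flag is what makes the registry a guard rail rather than a catalogue: a cheaper but unapproved model is never selected.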

Execution Layer

— Cloud agents run parallel build tasks. An orchestrating agent coordinates their work, managing dependencies and resolving conflicts. Agents push code directly to GitHub, triggering CI/CD pipelines. Long-running agents provide progress updates in Slack and Jira.
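Managing dependencies between parallel build tasks amounts to scheduling a dependency graph: run every task whose prerequisites are done, in parallel, then release the next wave. A minimal sketch using Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the task names are hypothetical, the agents are stubbed out so the scheduling logic runs on its own, and conflict resolution is not modelled.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical task graph: task -> tasks it depends on.
TASKS = {
    "schema": set(),
    "api": {"schema"},
    "ui": {"api"},
    "pipeline": {"schema"},
    "dashboard": {"pipeline", "api"},
}

def run_agent(task: str) -> str:
    """Stand-in for dispatching one cloud agent; a real call would be long-running."""
    return f"{task}: done"

def orchestrate(graph: dict[str, set[str]], workers: int = 4) -> list[list[str]]:
    """Run ready tasks in parallel waves and return the waves for inspection."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while sorter.is_active():
            ready = sorted(sorter.get_ready())
            waves.append(ready)
            # pool.map runs the wave concurrently and yields results in input order.
            for task, _result in zip(ready, pool.map(run_agent, ready)):
                sorter.done(task)
    return waves

for i, wave in enumerate(orchestrate(TASKS), start=1):
    print(f"wave {i}: {wave}")
```

For simplicity this waits for a whole wave before releasing the next; an orchestrator handling long-running agents would more likely call `done()` as each task finishes so dependants can start immediately.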

Registries

— Centralised registries maintain versioned artefacts: specifications that define agent behaviour, container images for consistent environments, IDE plugins that extend functionality. Everything is versioned, everything is code.

Integration Points

— The architecture connects at multiple levels: IDEs talk to MCP servers for context, models for intelligence, and orchestrators for execution. Registries feed specs, containers, and plugins to local environments. GitHub triggers cloud agents. Everything flows through well-defined interfaces.

Pillar 3: New Ways of Working

The shift to agentic development requires rethinking how teams operate. Roles evolve, workflows change, and new collaboration patterns emerge.

Humans focus on strategic work.

Product managers define problems worth solving. Designers perfect user experiences. Engineers architect systems and write specifications. The creative, high-judgment work stays human. The repetitive, well-defined work moves to agents.

The agile paradigm breaks.

Traditional ceremonies designed for two-week human coordination don't work for one-hour agent cycles. Sprint planning shifts from estimating implementation to prioritising problems. Standups disappear into continuous async updates. Reviews focus on outcomes, not code quality. Retrospectives move from sprint execution to platform improvements. Coordination ceremonies become decision forums. Synchronous time becomes precious, reserved for ambiguous decisions and strategic pivots. Optimising flow is paramount.

Agent In The Loop replaces Human In The Loop.

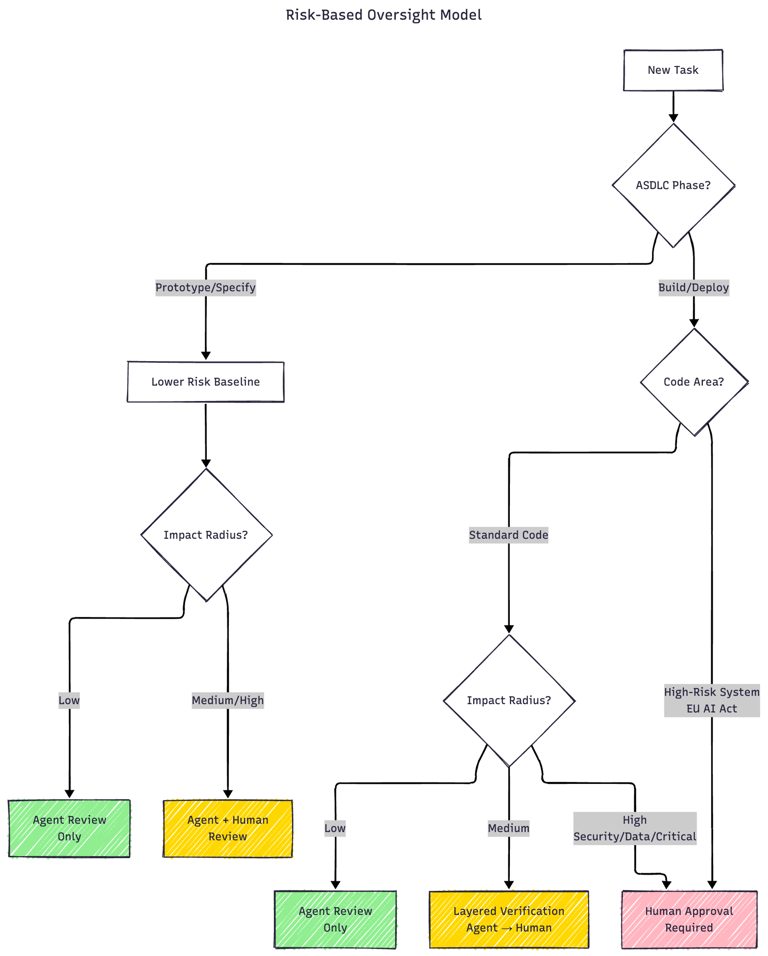

The default shifts from humans reviewing all agent work to agents reviewing other agents' work, with humans involved based on risk. Risk varies across three dimensions: ASDLC phase (e.g. Prototype allows more autonomy; Deploy demands stricter oversight), code area (e.g. high-risk systems as defined in the EU AI Act require closer review), and impact radius (e.g. security changes, sensitive data handling and critical systems trigger agent escalation to humans). Low-risk work proceeds autonomously. High-risk work gets layered verification: agent review first, human approval final.
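The three risk dimensions can be combined into a simple routing rule that decides who reviews a change. A minimal sketch; the phase names come from the workflow above, but the phase risk levels, the high-risk path prefixes and the escalation labels are hypothetical placeholders that a real team would define in policy.

```python
from enum import Enum

class Review(Enum):
    AUTONOMOUS = "proceed autonomously"
    AGENT = "agent review"
    AGENT_THEN_HUMAN = "agent review, then human approval"

# Dimension 1: ASDLC phase (later phases demand stricter oversight).
PHASE_RISK = {"prototype": 0, "specify": 1, "build": 1, "deploy": 2}
# Dimension 2: code area (hypothetical prefixes for systems treated as high-risk).
HIGH_RISK_AREAS = ("services/credit-scoring/", "services/hiring/")
# Dimension 3: impact radius (hypothetical change labels that always escalate).
ESCALATE_ON = {"security", "sensitive-data", "critical-system"}

def route(phase: str, path: str, labels: set[str]) -> Review:
    """Pick the review path for a change from its phase, code area and impact labels."""
    if labels & ESCALATE_ON or path.startswith(HIGH_RISK_AREAS):
        return Review.AGENT_THEN_HUMAN
    risk = PHASE_RISK[phase]
    if risk == 0:
        return Review.AUTONOMOUS
    if risk == 1:
        return Review.AGENT
    return Review.AGENT_THEN_HUMAN

print(route("prototype", "ui/banner.tsx", set()).value)       # proceed autonomously
print(route("build", "services/orders/api.py", set()).value)  # agent review
print(route("build", "auth/tokens.py", {"security"}).value)   # agent review, then human approval
```

Code area and impact radius are checked first so that they override the phase: a security change escalates to a human even during prototyping.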

Specifications become the primary work product.

Clear specs matter even more when they are written for autonomous agents, so teams must invest time in perfecting them. Spec reviews replace some code reviews. The ability to specify intent precisely becomes a core skill.

Trust becomes the key metric.

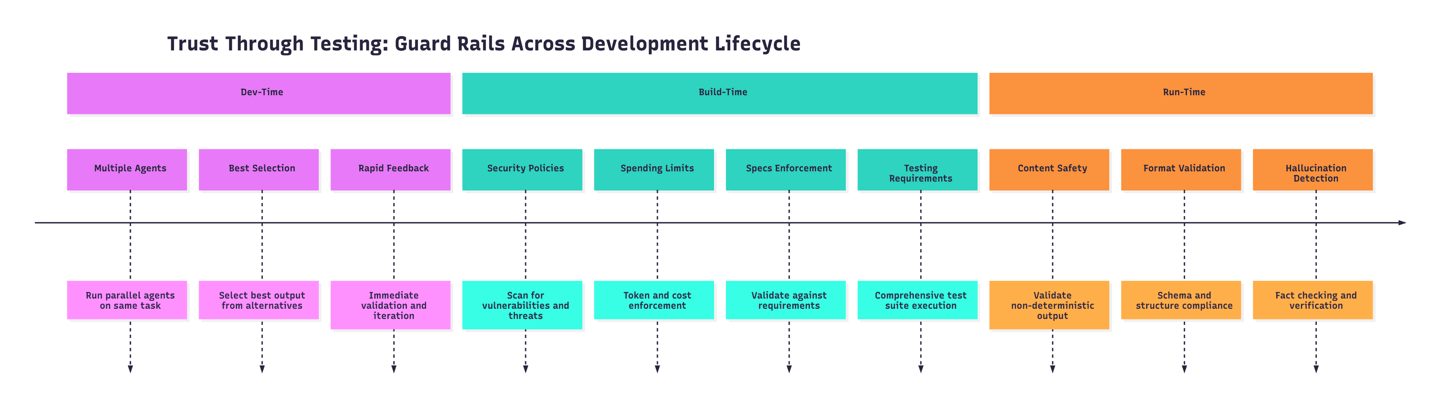

When agents write code, trust shifts from "who wrote it" to "does it pass comprehensive tests". Trust becomes measurable: test coverage, pass rates, defect escape rates, security scan results, rollback frequency. Guard rails make trust operational across three timeframes: dev-time (run multiple agents on the same task and select the best output), build-time (security policies, spending limits, spec enforcement, testing requirements), and run-time (check each non-deterministic output for content safety, correct format and hallucinations). Test to trust. Then trust the code.
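The dev-time guard rail (run multiple agents on the same task, select the best output) is a best-of-N selection. A minimal sketch, assuming each candidate can be scored by the fraction of the task's tests it passes; the candidate implementations and tests below are toy stand-ins for real agent outputs and test suites.

```python
from typing import Callable, Optional

Candidate = Callable[[str], str]

# Toy task: normalise a username. Each test is (input, expected output).
TESTS = [("  Alice ", "alice"), ("BOB", "bob"), ("carol", "carol")]

# Stand-ins for outputs from three agents working on the same task.
CANDIDATES: dict[str, Candidate] = {
    "agent-a": lambda s: s.lower(),          # forgets to strip whitespace
    "agent-b": lambda s: s.strip().lower(),  # correct
    "agent-c": lambda s: s.strip(),          # forgets to lower-case
}

def pass_rate(candidate: Candidate) -> float:
    passed = sum(1 for given, expected in TESTS if candidate(given) == expected)
    return passed / len(TESTS)

def best_of_n(candidates: dict[str, Candidate], threshold: float = 1.0) -> Optional[str]:
    """Pick the highest-scoring candidate; return None if none meets the trust threshold."""
    name, best = max(candidates.items(), key=lambda item: pass_rate(item[1]))
    return name if pass_rate(best) >= threshold else None

print(best_of_n(CANDIDATES))  # agent-b
```

The threshold is what turns selection into a trust gate: if no candidate passes every test, nothing is promoted and the task goes back for rework rather than shipping the least-bad output.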

Feedback loops tighten.

Prototypes appear in hours. Specifications clarify in hours. Implementations complete in days. Deployments happen in minutes. The entire cycle compresses. Learning accelerates. Teams can quickly see the impact of their work upon users. Product management becomes hypothesis-based testing.

Work decomposes differently.

Start with architectural decomposition: break repositories into the smallest possible components that can be worked on independently, and decompose UIs into components that compose independently. Map tasks and their context to these components. Then size tasks to fit autonomy horizons: one-hour tasks execute reliably. Build safeguards into the parts that resist decomposition: build pipelines, UI-to-backend queries, database calls. These integration points become bottlenecks if not protected. The decomposition hierarchy flows: architecture → components → tasks → subtasks within autonomy limits.
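The last step of the hierarchy, sizing work to fit the autonomy horizon, can be checked mechanically. A minimal sketch, assuming each task carries an estimated duration and optional subtasks; the one-hour limit comes from the text, while the `Task` type, task names and estimates are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field

AUTONOMY_HORIZON_MIN = 60  # one-hour tasks execute reliably (per the text)

@dataclass
class Task:
    name: str
    estimate_min: int
    subtasks: list[Task] = field(default_factory=list)

def agent_ready(task: Task) -> list[Task]:
    """Leaf tasks to hand to agents; raise if a leaf is still too big to run reliably."""
    if task.subtasks:
        return [leaf for sub in task.subtasks for leaf in agent_ready(sub)]
    if task.estimate_min > AUTONOMY_HORIZON_MIN:
        raise ValueError(f"{task.name!r} ({task.estimate_min} min) needs further decomposition")
    return [task]

checkout = Task("checkout component", 240, [
    Task("cart summary UI", 45),
    Task("payment API client", 90, [Task("request models", 30), Task("retry logic", 40)]),
    Task("order confirmation UI", 50),
])
print([t.name for t in agent_ready(checkout)])
# ['cart summary UI', 'request models', 'retry logic', 'order confirmation UI']
```

Only leaves are dispatched, so a parent such as "payment API client" can exceed the horizon as long as everything beneath it fits.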

Pillar 4: Success Metrics

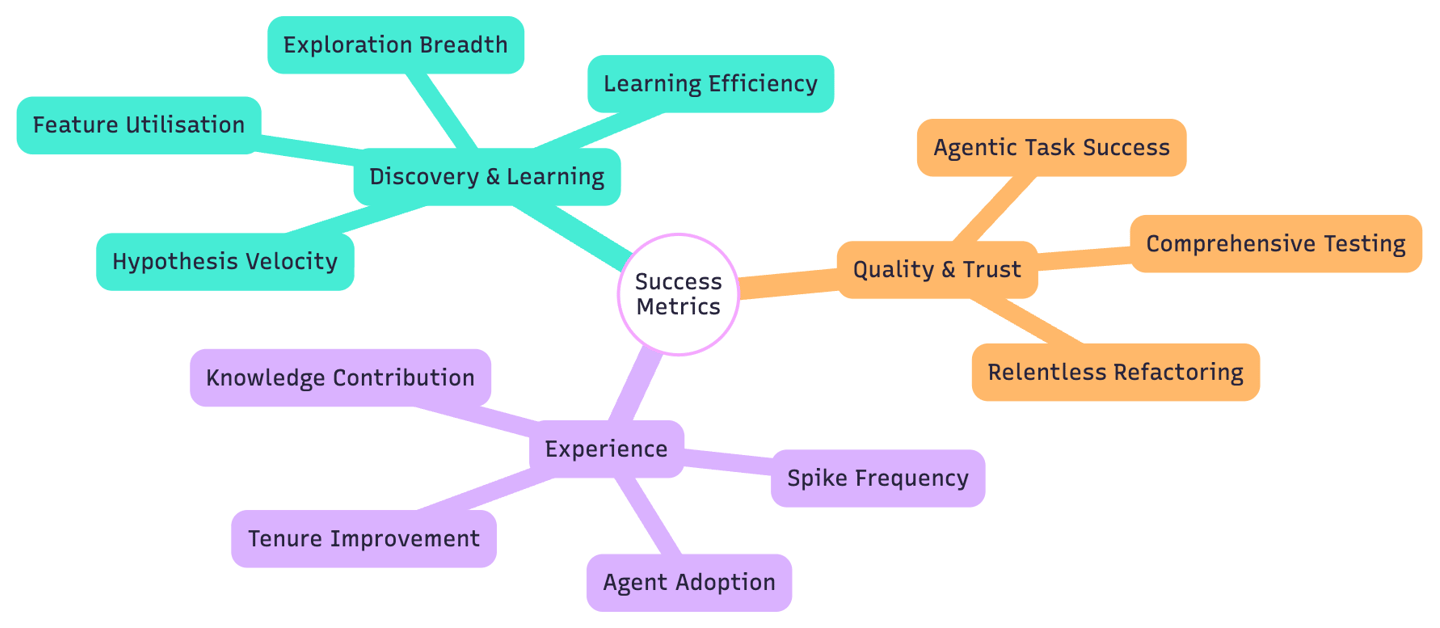

Success manifests across three dimensions: discovery & learning, quality & trust, and experience. Each dimension combines quantitative measurements with qualitative assessment.

Discovery & Learning Metrics

If building code is cheap, the key skill is deciding what to build. How good are we at making that decision?

Hypothesis velocity: Hypotheses tested per week (target: 5-10 validated or invalidated, signals we're learning fast and making evidence-based decisions)

Exploration breadth: Alternative approaches prototyped before committing (target: 3+ variations per major decision, signals we're avoiding premature convergence)

Learning efficiency: Insights gained per experiment (target: maximise signal from minimal code, signals we're designing sharp experiments)

Feature utilisation rate: Percentage of shipped features actually used by users (target: >80%, signals better specification alignment)

Quality & Trust Metrics

When code is unlimited, you no longer need to choose between quality and velocity. Create trust by demonstrating quality.

Agentic task success rate: Tasks completed successfully end-to-end (target: 90%+, signals effective decomposition, agent orchestration and specification clarity)

Comprehensive test coverage: Automated verification across business dimensions, namely functional correctness, intent alignment, policy compliance (security, spending, specs) and data safety (target: 95%+, signals testing extends beyond code to behaviour, intent, and safety)

Relentless refactoring: Percentage of code changes that are improvements vs new features (target: 15-25%, signals we optimise freely when generation is cheap without destabilising the codebase)
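Two of these metrics fall straight out of records most teams already keep. A minimal sketch, assuming each agentic task is logged with an end-to-end outcome and each merged change is labelled as a refactor or a feature; the record shapes and labels are hypothetical, while the targets are the ones stated above.

```python
from dataclasses import dataclass

@dataclass
class TaskRecord:
    task_id: str
    succeeded_end_to_end: bool

@dataclass
class ChangeRecord:
    pr_id: str
    kind: str  # hypothetical labels: "refactor" or "feature"

def task_success_rate(tasks: list[TaskRecord]) -> float:
    return sum(t.succeeded_end_to_end for t in tasks) / len(tasks)

def refactoring_share(changes: list[ChangeRecord]) -> float:
    return sum(c.kind == "refactor" for c in changes) / len(changes)

# Illustrative data: 9 of 10 tasks succeed; 4 of 20 changes are refactors.
tasks = [TaskRecord(f"T{i}", i != 3) for i in range(10)]
changes = [ChangeRecord(f"PR{i}", "refactor" if i % 5 == 0 else "feature") for i in range(20)]

success = task_success_rate(tasks)
refactor = refactoring_share(changes)
print(f"task success {success:.0%} (target 90%+): {'met' if success >= 0.9 else 'missed'}")
print(f"refactoring {refactor:.0%} (target 15-25%): {'met' if 0.15 <= refactor <= 0.25 else 'missed'}")
```

Both functions assume non-empty input; a reporting job would need to handle a period with no recorded tasks or changes.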

Experience Metrics

How do people feel about their work?

Agent adoption: Percentage of eligible tasks where engineers choose to use agents (target: 80%+, signals people prefer working with agents)

Spike frequency: Technical spikes per person per quarter (target: 4+, signals time available for exploration during working hours)

Knowledge contribution rate: Contributions to internal/external knowledge repos per person per quarter (target: 6+, signals engagement and willingness to share learnings)

Tenure improvement: Average tenure in role and at the company (target: increasing, signals reduced burnout and higher job satisfaction)

Conclusion: What This Means in Practice

As we’ve seen, the promise of AI-augmented software development is emphatically not to do the same amount of work with fewer people. The promise of near-unlimited code is to remove the highly skilled, highly complex work of code generation as a bottleneck, so that we can focus on solving problems. This is what it means in practice:

The metrics described track the transformation in daily experience for everyone building software: developers, designers, product managers. The focus shifts from repetition to creativity, from interruption to flow, from ambiguity to clarity.

Agents handle boilerplate code, test generation, configuration updates, and documentation maintenance. Developers reclaim time for architecture decisions, algorithm design, and user experience challenges. Product managers create high-fidelity prototypes directly. Stakeholder feedback arrives days earlier. Fewer cycles wasted building the wrong thing.

Specifications eliminate ambiguity. Tests run continuously. Validation happens in real-time. Developers know immediately if something breaks. Prototypes appear in hours. Implementations complete in days. Deployments happen in minutes. The entire cycle compresses.

Agents work asynchronously. Updates appear when convenient. Flow states last longer. Agents work overnight. Long-running builds don't block developers. Deploy on Friday without weekend anxiety. On-call becomes less stressful through better monitoring, clearer logs, faster fixes. Specifications define scope clearly. Tests validate completeness. Less uncertainty. Less stress. More confidence that work will succeed.

More opportunities for creativity. Faster deployment. Faster feedback. Flow from idea to impact.